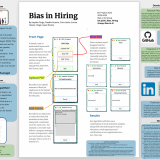

Bias in Hiring

Natalie Huante, Hayden Fargo, Chris Isidro, Emma Harper, Diego Lopez Ramos

May 12, 2022

Algorithms that are meant to function without bias commonly inherit the biases of their programmers. This stems from several issues, including the use of traits such as race, gender, and nationality. In our project, we look specifically at how the use of these algorithms affects the hiring process. In an attempt to reduce bias, we created our own algorithm that does not take into account characteristics such as race, gender, or sex. Although it is simplified compared with algorithms currently in use, our application can be built upon and improved further.