Interterm Robot Attack! Student Transformers in the Folino

January 17, 2014

Last week, I had the exciting chance to see our brand-new motion capture suits transform a living, breathing Dodge College student into a robot. A big robot.

It was only the second day of their Interterm boot camp, “Motion Capture Fundamentals, Techniques, and Practical Exercises,” and yet I don’t think anyone in the room was prepared for the kind of magic we witnessed.

First, we got some context about what we were about to see by listening to commentary by the Lord of the Rings team responsible for animating the revolutionary character of Gollum. While describing some very clever, creative tricks they used to manufacture the all-digital character, they also described the expensive, awkward obstacles they encountered using what’s known as “optical motion capture” suits.

Our older motion capture suits required actors and directors to work in one isolated room, in a full-body suit.

Turns out, these are the same kind that we currently have installed in the Motion Capture Studio — the ones that require an array of cameras mounted on walls, with high-bandwidth connections and computers to synthesize all the data. Not to mention that, of course, the actors must divorce themselves from the scene by acting on an empty set, with no props and few visual cues.

But there’s another, newer kind of motion capture, referred to as “gyroscopic motion capture,” that lets computers record actors’ movements while they move and act freely in real spaces, with no cameras required. Our new suits work by having the sensors that cover them continually broadcast their location in space, along with their orientation, velocity, and charge level. The computer uses this data to model the skeletal structure of the actor. Amazingly, this model can then be applied to any number of non-human, computer-generated bodies to give them the impression of lifelike motion.
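For the curious, here is a rough idea of how that modeling step might look in code. This is only a minimal Python sketch, not the software our suits actually use: it assumes a simplified 2D world, one sensor per limb segment reporting an absolute angle, and made-up bone lengths. The function names `joint_positions` and `retarget` are hypothetical, chosen just for illustration.

```python
import math

def joint_positions(root, bones):
    """Chain bones end to end, starting at the root joint.

    Each bone is (length, angle_degrees), where the angle is the absolute
    orientation we assume a sensor reports for that limb segment.
    """
    x, y = root
    points = [(x, y)]
    for length, angle in bones:
        x += length * math.cos(math.radians(angle))
        y += length * math.sin(math.radians(angle))
        points.append((x, y))
    return points

def retarget(bones, scale):
    """Reuse the same orientations on a body with longer (or shorter) bones."""
    return [(length * scale, angle) for length, angle in bones]

# Hypothetical readings for one leg: thigh and shin both pointing straight down.
actor_leg = [(0.45, -90.0), (0.40, -90.0)]

# The actor's hip sits 1 unit up; the robot is three times the size.
actor_points = joint_positions((0.0, 1.0), actor_leg)
robot_points = joint_positions((0.0, 3.0), retarget(actor_leg, 3.0))

print(actor_points)
print(robot_points)
```

The point of the sketch is that the performance lives in the angles, not in the bone lengths, which is why the same motion could plausibly drive a body of a very different size and shape.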

Today’s suits are much lighter and smaller, and they fit more body sizes. They don’t look uncomfortable!

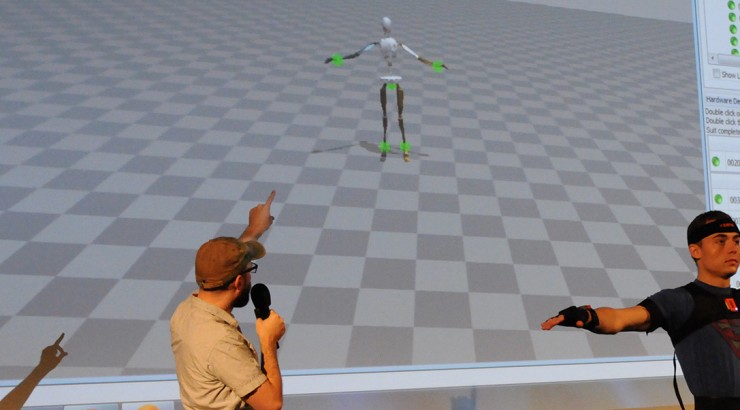

So after watching the commentary, we selected a student actor and outfitted him in a wetsuit-like garment with more than a dozen small sensors attached. Then we got to see this setup come to life, almost literally.

I’ve seen motion capture before, but there’s always been something so artificial about it, something so unlike other kinds of filming, that it’s hard to compare them. But, for the first time, I got to see an actor looking truly natural on stage as he mimicked, with surprising ease, the stiff, jerky motions of the large robot he would soon be inhabiting. Although he broke the cardinal rule a few times (“don’t watch yourself moving on the screen! It’s like looking down when you’re on a cliff”), he clearly didn’t have to alter much about his performance for the technology to capture it. Having to do so seemed to frustrate Gollum’s Andy Serkis to no end.

But it wasn’t until the student stepped down off the stage that we all realized how impressive this technology really was. We watched the computer model on screen adjust to his movements as he stepped down the stairs, walked up the ramp, and turned to join us in the audience, motion suit still recording. We watched his ghostly skeleton hover in the air in a seated pose (as we all were, of course), and watching him float above the ground, it really hit home that this was true freedom of movement.

I’m working on taking some of the footage from that demonstration to cut into a short video to show off how cool this technology is, so stay tuned to the blog to watch our actual student-robot transformation! But for now, take a look at how it all appeared:

- The motion capture software (onscreen) captures every movement he makes, in real time.

- The sensors produce a huge amount of data, as you can see on the sides of the screen, but using it seems intuitive.

- Here we see 3DS MAX, the software tool we use to create the forms which will be attached to the mocapped skeleton.

- The robot-student, stepping around his virtual world. The marks below him are the steps recorded by the mocap suit.